Developing a Voice-First User Experience

Understanding the Core Principles

Voice-first design represents a fundamental shift in how we approach user interfaces. Instead of relying on traditional visual elements like buttons and menus, this method focuses on natural human conversation. The goal is to create interactions that feel intuitive and effortless, almost like talking to another person. Designers must deeply analyze how people naturally communicate to build systems that anticipate needs and respond appropriately.

This approach changes everything about how information is structured and presented. Rather than forcing users to navigate complex menu trees, voice interfaces should understand and respond to complete thoughts and intentions. The entire user journey needs rethinking from the ground up to support this more natural interaction style.

Crafting Conversational Flows

Creating natural conversations with technology requires careful attention to detail. The system shouldn't just recognize words - it needs to understand meaning, context, and even implied requests. This involves mapping out the range of phrasings users are likely to use for each request and designing a helpful response for each underlying intent.
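
As a rough illustration of mapping phrasings to intents, the sketch below scores an utterance against a few sample phrases by word overlap. The intent names, sample phrases, and the `match_intent` helper are all hypothetical, and a production system would normally rely on a trained language-understanding model rather than this kind of overlap score.

```python
import re

# Hypothetical intents with sample phrasings (illustrative only).
INTENT_EXAMPLES = {
    "set_timer": ["set a timer", "start a countdown", "remind me in ten minutes"],
    "play_music": ["play some music", "put on a song", "i want to hear jazz"],
    "get_weather": ["what is the weather", "will it rain today", "is it cold outside"],
}


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def match_intent(utterance, min_score=0.3):
    """Return (intent, score) for the best-matching sample phrase.

    The score is the Jaccard overlap between word sets. If no sample
    reaches min_score, the intent is None so the caller can ask the
    user to rephrase instead of guessing.
    """
    words = tokenize(utterance)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            example_words = tokenize(example)
            union = words | example_words
            score = len(words & example_words) / len(union) if union else 0.0
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score < min_score:
        return None, best_score
    return best_intent, best_score


intent, score = match_intent("could you play some music")
print(intent, round(score, 2))
```

Returning `None` below the threshold is a deliberate choice: an unrecognized phrase is better handled with a clarifying question than with a wrong action.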

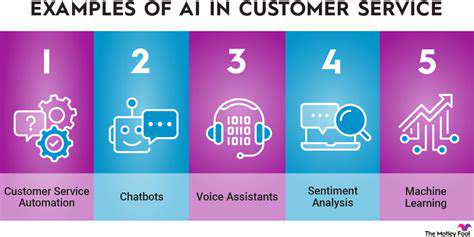

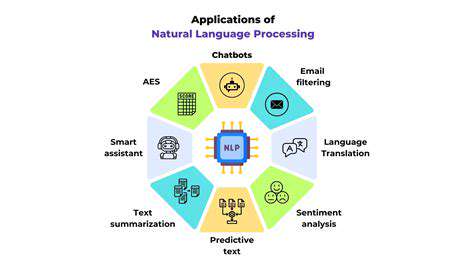

Advanced natural language processing capabilities are essential here. The system must handle regional expressions, casual speech patterns, and the subtle nuances that make human conversation so rich and complex. Without this depth of understanding, interactions will feel robotic and frustrating.

Prioritizing Accessibility and Inclusivity

Voice interfaces naturally lend themselves to more accessible design, but this requires conscious effort. Developers must consider users with different abilities, speech patterns, and technical comfort levels. The system should adapt to various accents and speaking styles while providing clear feedback.

Multiple interaction methods should be available, including text alternatives for voice commands. Clear, concise responses help all users understand the system's actions and correct any mistakes easily.

Considering the Role of Context and Intent

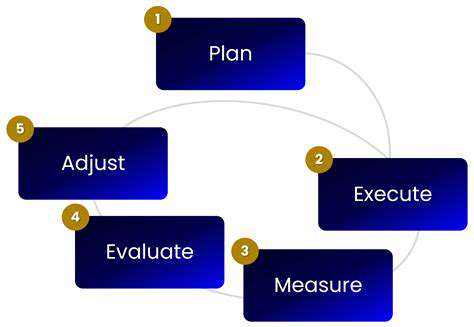

Truly effective voice interfaces go beyond simple command recognition. They understand the situation surrounding each request and adjust responses accordingly. This means tracking conversation history, environmental factors, and even emotional tone.

For example, a navigation system should account for background noise when interpreting spoken requests. A smart assistant should remember previous requests so that follow-up questions receive coherent answers. This contextual awareness separates basic voice recognition from truly intelligent interaction.
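
One way to picture remembering previous requests is a small context object that carries details from one turn to the next. The sketch below is a minimal illustration, assuming a hypothetical `DialogueContext` class and `handle_weather` handler. It tracks only conversation history and a few remembered values, not environmental factors or emotional tone.

```python
from dataclasses import dataclass, field


@dataclass
class DialogueContext:
    """Hypothetical per-conversation memory: past intents and known details."""
    history: list = field(default_factory=list)
    slots: dict = field(default_factory=dict)

    def update(self, intent, **slots):
        """Record the intent and keep any details the user actually supplied."""
        self.history.append(intent)
        self.slots.update({k: v for k, v in slots.items() if v is not None})


def handle_weather(context, city=None, day=None):
    """Answer a weather request, filling missing details from earlier turns."""
    context.update("get_weather", city=city, day=day)
    city = context.slots.get("city")
    day = context.slots.get("day", "today")
    if city is None:
        # Nothing to fall back on, so ask instead of guessing.
        return "Which city do you mean?"
    return f"Here is the forecast for {city} {day}."


ctx = DialogueContext()
print(handle_weather(ctx, city="Paris"))    # "What's the weather in Paris?"
print(handle_weather(ctx, day="tomorrow"))  # "And tomorrow?" reuses the city
```

The second call supplies no city, yet the response still refers to Paris because the context carried it over from the first turn.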

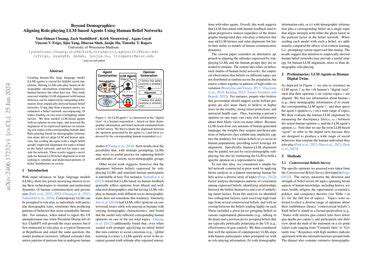

Evaluating and Iterating on Voice-First Design

Developing voice interfaces requires continuous refinement through testing. Real user feedback reveals how people actually interact with the system versus how designers imagine they will. Regular testing cycles help identify confusing elements and opportunities for improvement.

Success metrics should focus on naturalness of interaction and task completion rates rather than just technical accuracy. The system should evolve based on how real users respond to it in everyday situations.
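
To make these metrics concrete, the sketch below computes a task completion rate along with two rough proxies for how smoothly conversations went. The `Session` fields and the choice of proxies (turns per completed task, share of turns that were reprompts) are assumptions for illustration, not an established standard.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical log record for one user session."""
    completed: bool  # did the user finish the task they started?
    turns: int       # total conversational turns in the session
    reprompts: int   # turns where the system had to ask again


def summarize(sessions):
    """Aggregate completion and friction metrics across sessions."""
    total = len(sessions)
    completed = [s for s in sessions if s.completed]
    total_turns = sum(s.turns for s in sessions)
    return {
        "task_completion_rate": len(completed) / total if total else 0.0,
        "avg_turns_when_completed": (
            sum(s.turns for s in completed) / len(completed) if completed else 0.0
        ),
        "reprompt_rate": (
            sum(s.reprompts for s in sessions) / total_turns if total_turns else 0.0
        ),
    }


sessions = [
    Session(completed=True, turns=4, reprompts=0),
    Session(completed=True, turns=6, reprompts=1),
    Session(completed=False, turns=10, reprompts=4),
    Session(completed=True, turns=2, reprompts=0),
]
print(summarize(sessions))
```

A high reprompt rate alongside acceptable recognition accuracy would suggest the conversation design, rather than the speech recognizer, is where users are getting stuck.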

Prioritizing Conversational Flow and Natural Language Processing (NLP)

Understanding the Importance of Conversational Flow

A well-designed conversational flow makes interactions feel natural and productive. Good flow creates a rhythm that helps users feel understood and guides them smoothly toward their goals. When the rhythm breaks - whether through awkward pauses, misunderstood phrases, or ill-timed responses - the entire experience suffers.

Building Rapport Through Natural Dialogue

Authentic conversation builds trust between users and technology. Systems should demonstrate active listening through appropriate responses and natural follow-up questions. The tone should be helpful without being overly familiar, professional without being cold.

Allowing for natural digressions and topic shifts makes interactions feel more human. The system should gracefully handle interruptions and return to the main thread when appropriate.

Managing Transitions and Avoiding Disruptions

Smooth topic transitions maintain engagement and comprehension. Effective transitions use natural language cues that signal shifts in subject or intent. The system should provide clear markers when moving between different functions or information types.

Responding Effectively to Different Communication Styles

Adapting to various speaking styles demonstrates true conversational intelligence. Some users will be terse and direct; others more verbose. The system should adjust response length and detail level accordingly while maintaining clarity.

Enhancing Engagement and Keeping the Conversation Going

Thought-provoking questions and relevant follow-ups demonstrate active engagement. The system should know when to dig deeper and when to move forward. Balancing efficiency with thoroughness creates satisfying interactions that users want to repeat.

Testing and Iteration for Continuous Improvement

Understanding the Importance of Testing

Comprehensive testing reveals how real users interact with voice systems in unpredictable ways. Early testing catches issues before they frustrate users. Testing should cover not just technical performance but also emotional response and perceived usefulness.

Iterative Design and Feedback Loops

Each testing cycle should inform specific improvements. Watching users struggle with certain phrases or commands provides concrete opportunities for refinement. The best systems evolve through dozens of small adjustments based on real usage data.

Voice Command Accuracy and Reliability

Recognition must work consistently across diverse voices and environments. Testing should include challenging conditions like background noise and overlapping speech. The system should gracefully handle recognition failures with helpful recovery options.
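
Graceful recovery can be sketched as a policy that depends on recognizer confidence and on how many times recognition has already failed. The thresholds, prompt wording, and the `respond_to_recognition` function below are hypothetical; real values would come from testing with actual users.

```python
def respond_to_recognition(transcript, confidence, failures=0,
                           accept_at=0.8, confirm_at=0.5):
    """Choose the next system action for one recognition result.

    confidence is assumed to be a score between 0 and 1 from the
    recognizer. failures counts earlier unsuccessful attempts for the
    same request. Returns an (action, payload) pair.
    """
    if confidence >= accept_at:
        return ("execute", transcript)
    if confidence >= confirm_at:
        # Unsure: confirm before acting rather than risk a wrong action.
        return ("confirm", f"Did you say: {transcript}?")
    if failures == 0:
        return ("reprompt", "Sorry, I didn't catch that. Could you say it again?")
    if failures == 1:
        # Second failure: offer examples instead of repeating the same prompt.
        return ("reprompt",
                "I'm still having trouble. You can say things like "
                "'set a timer' or 'play music'.")
    # Repeated failures: switch to another input method.
    return ("fallback", "Let's try another way. You can type your request instead.")


print(respond_to_recognition("play jazz", 0.92))
print(respond_to_recognition("play jazz", 0.61))
print(respond_to_recognition("", 0.20, failures=2))
```

Escalating the prompt on each failure avoids the loop in which the system repeats the same apology while the user repeats the same phrase.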

Evaluating Conversational Flows and Natural Language Processing (NLP)

Testing should verify the system understands not just individual commands but complete conversations. Can it track context across multiple turns? Does it recognize when the user changes subjects or asks follow-up questions?

Usability Testing and User Feedback

Direct observation reveals problems users might not articulate in surveys. Facial expressions and body language during testing often reveal more than verbal feedback does. Testing with diverse user groups helps confirm that the system works for a wide range of people.

Performance Optimization and Scalability

The system must maintain responsiveness during peak usage. Testing under load reveals bottlenecks that could frustrate users. Response times should remain consistent regardless of user volume.
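
One simple way to check whether response times stay consistent is to compare a high percentile of latency under load against the same percentile at normal volume. The sketch below assumes latency samples in milliseconds have already been collected. The `percentile` and `latency_regressed` helpers and the 1.5x tolerance are illustrative choices, not recommended values.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def latency_regressed(baseline_ms, loaded_ms, pct=95, tolerance=1.5):
    """True if latency under load exceeds tolerance times the baseline."""
    return percentile(loaded_ms, pct) > tolerance * percentile(baseline_ms, pct)


baseline = [200, 210, 220, 230, 240]  # response times at normal volume
loaded = [210, 220, 230, 500, 900]    # response times during a load test
print(percentile(baseline, 95), percentile(loaded, 95))
print(latency_regressed(baseline, loaded))
```

Percentiles are used instead of averages because a few very slow responses, which are what users notice in a conversation, can hide behind a healthy-looking mean.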

Accessibility and Inclusivity Considerations

Testing with users who have disabilities helps confirm that the system works for everyone. Does it accommodate speech impairments? Does it provide adequate alternatives for users who are deaf or hard of hearing? Does it handle assistive technologies gracefully?
