Multivariate Testing for Complex User Interfaces

Designing Experiments for Maximum Impact

Experimental design determines whether results are valid and reliable. Careful test construction reduces bias and gives the experiment enough statistical power to detect real effects, although it cannot ensure a significant result. Key considerations include test scope, duration, sample size, and the choice of a control group. A well-planned experiment makes it far more likely that observed behavior changes stem from the tested variables rather than external influences. This demands meticulous preparation and execution.
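To make the scope and sample-size considerations concrete, here is a minimal sketch that enumerates a full-factorial design and estimates the visitors needed per variation. The factor names, the baseline and target conversion rates, and the helper functions are hypothetical, and the sample-size formula is the common normal approximation for comparing two proportions, so treat the output as a planning estimate rather than an exact requirement.

```python
import itertools
import math
from statistics import NormalDist


def full_factorial(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    level_lists = [factors[name] for name in names]
    return [dict(zip(names, combo)) for combo in itertools.product(*level_lists)]


def sample_size_per_variation(p_base, p_target, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation (two-sided two-proportion test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_base * (1 - p_base) + p_target * (1 - p_target)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_target - p_base) ** 2)


# Hypothetical page elements under test: 2 x 2 x 3 = 12 variations.
factors = {
    "headline": ["A", "B"],
    "button_color": ["green", "orange"],
    "layout": ["single", "two_column", "grid"],
}
variations = full_factorial(factors)

# Assumed 5% baseline conversion, and a lift to 6% that we want to detect.
n_per_variation = sample_size_per_variation(0.05, 0.06)
total_visitors = n_per_variation * len(variations)
print(len(variations), n_per_variation, total_visitors)
```

Because the total grows with every added factor level, a calculation like this is a quick way to check whether the planned test duration can supply enough traffic.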

Analyzing the Results Effectively

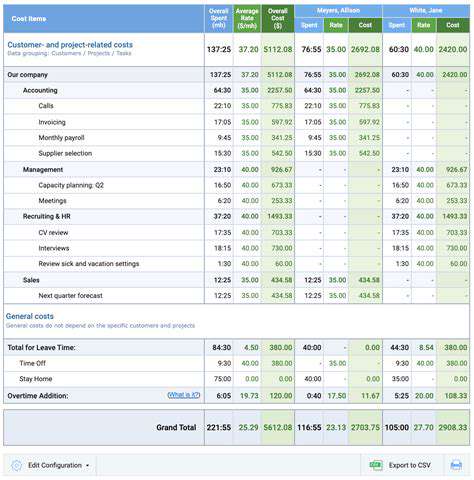

Effective MVT result analysis identifies top-performing variations. Specialized multivariate analysis tools help decipher complex datasets. Distinguishing statistically significant differences in conversion rates, click patterns, or engagement metrics is crucial for practical application. Proper analysis transforms raw data into strategic insights.
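As one way to check whether a difference in conversion rates is statistically significant, the sketch below runs a pooled two-proportion z-test using only the Python standard library. The function name and the visitor counts are illustrative assumptions, not figures from a real test, and a dedicated statistics package would normally be used in practice.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variations convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: control converts 500 of 10,000, variation 600 of 10,000.
z_score, p_value = two_proportion_z_test(500, 10_000, 600, 10_000)
print(f"z = {z_score:.2f}, p = {p_value:.4f}")
```

When many variations are each compared against the control, the chance of a false positive rises with the number of comparisons, so a single p-value from this test should be read with that in mind.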

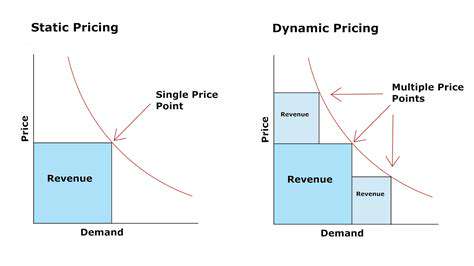

Interpreting and Implementing Findings

Result interpretation involves assessing both statistical significance and real-world implications. Evaluate how findings affect critical business outcomes like revenue generation or customer conversion. The transition from data to implementation requires balancing statistical evidence with practical considerations.

Overcoming Challenges in Multivariate Testing

While powerful, MVT presents unique difficulties. Each added element multiplies the number of combinations, which makes designs more complex, results harder to interpret, and data easier to distort. Successful testing demands rigorous planning, statistical knowledge, and quality control measures to navigate these challenges.

Scaling Multivariate Testing for Larger Campaigns

Expanding MVT across larger campaigns introduces management complexities. Robust platforms and methodologies maintain efficiency at scale. Automation and optimized workflows become essential as testing expands to preserve control and effectiveness.

Implementing and Interpreting Multivariate Testing Results

Implementing Multiple Regression Analysis

Multiple regression analysis begins with thorough data collection and preparation. This involves selecting the response and predictor variables, verifying data integrity, and handling incomplete data points. Transformations such as taking logarithms may be needed to satisfy the model's assumptions of linear relationships and constant variance. Initial exploratory analysis helps reveal relationships between variables. The choice of software (R, SPSS, etc.) shapes the workflow, although the underlying method is the same.
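The preparation steps above can be sketched as follows. This example drops records with missing values (listwise deletion, which is only one of several ways to handle incomplete data) and log-transforms a skewed response. The field names and records are hypothetical.

```python
import math


def prepare_rows(records, response, predictors):
    """Drop incomplete records and add a log-transformed response column."""
    cleaned = []
    for record in records:
        values = [record.get(response)] + [record.get(p) for p in predictors]
        if any(value is None for value in values):
            continue  # listwise deletion: skip records with any missing field
        row = {p: record[p] for p in predictors}
        # log1p(x) = log(1 + x), which stays defined when the response is zero.
        row["log_" + response] = math.log1p(record[response])
        cleaned.append(row)
    return cleaned


# Hypothetical per-session records from a multivariate test.
records = [
    {"revenue": 120.0, "headline_b": 1, "button_orange": 0},
    {"revenue": None, "headline_b": 0, "button_orange": 1},  # missing response
    {"revenue": 0.0, "headline_b": 0, "button_orange": 0},
]
cleaned = prepare_rows(records, "revenue", ["headline_b", "button_orange"])
print(cleaned)
```

Dropping records is simple but can bias results when data are not missing at random, so the share of discarded rows is worth reporting alongside the analysis.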

After preparation, model specification determines which variables to include, based on their theoretical relationships. Multicollinearity, which occurs when predictors correlate strongly with one another, requires special attention because it inflates the uncertainty of coefficient estimates and can compromise result reliability. Once the model is specified, the coefficients are estimated, typically with Ordinary Least Squares (OLS). Proper interpretation of these coefficients reveals each predictor's effect on the outcome variable.
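For illustration, OLS estimation can be sketched in plain Python by solving the normal equations (X'X)b = X'y. The data below are hypothetical: a 2 x 2 test coded with 0/1 indicator variables, with a main-effects-only model fitted to the observed conversion rate of each variation. In practice a statistics package would handle this, and conversion data are often modeled with logistic rather than linear regression.

```python
def solve_linear_system(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors may be perfectly collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * solution[j] for j in range(i + 1, n))
        solution[i] = (aug[i][n] - tail) / aug[i][i]
    return solution


def ols_fit(rows, y):
    """Return [intercept, b1, b2, ...] that minimizes the sum of squared residuals."""
    design = [[1.0] + list(row) for row in rows]  # leading 1.0 is the intercept term
    k = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(design, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Columns: headline_b, button_orange (hypothetical 0/1 indicators).
rows = [(0, 0), (1, 0), (0, 1), (1, 1)]
conversion_rate = [5.0, 5.8, 5.4, 6.2]  # percent, illustrative values
intercept, headline_effect, button_effect = ols_fit(rows, conversion_rate)
print(intercept, headline_effect, button_effect)
```

The solver raises an error when the predictors are perfectly collinear, which is the extreme case of the multicollinearity problem described above.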

Interpreting Regression Coefficients

Regression coefficient interpretation forms the core of multiple regression analysis. These values indicate how much the outcome variable changes with a one-unit predictor change, assuming other factors remain constant. The coefficient's sign and size reveal relationship direction and strength between variables.

Positive coefficients suggest direct relationships, while negative values indicate inverse relationships. Measurement units for both variables must be considered when assessing coefficient magnitude's practical significance. A coefficient's importance depends on the research context and underlying theory. Significance testing (t-tests) helps determine whether observed relationships likely reflect true effects rather than random variation.
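As a small worked example of sign, magnitude, and significance, the sketch below fits a single-predictor regression and computes the t-statistic for its slope. The data (number of form fields shown versus completion rate) are hypothetical. The standard library has no t-distribution, so the statistic is compared against the tabulated two-sided 5% critical value for 6 degrees of freedom (2.447) instead of computing a p-value.

```python
import math


def slope_t_statistic(x, y):
    """Return (slope, standard error, t-statistic) for a simple linear regression."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    # Two parameters were estimated, so n - 2 degrees of freedom remain.
    residual_variance = sum(r ** 2 for r in residuals) / (n - 2)
    std_error = math.sqrt(residual_variance / sxx)
    return slope, std_error, slope / std_error


# Hypothetical data: form fields shown vs. completion rate (percent).
form_fields = [2, 3, 4, 5, 6, 7, 8, 9]
completion_rate = [41.0, 39.2, 36.8, 35.1, 33.0, 30.9, 29.2, 26.8]

slope, std_error, t_stat = slope_t_statistic(form_fields, completion_rate)
CRITICAL_T = 2.447  # two-sided 5% critical value for n - 2 = 6 degrees of freedom
is_significant = abs(t_stat) > CRITICAL_T
print(f"slope = {slope:.2f}, t = {t_stat:.1f}, significant = {is_significant}")
```

Here the negative slope reads as roughly two percentage points of completion rate lost per additional form field, which shows why the units of both variables matter when judging practical importance.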

Evaluating Model Assumptions and Goodness of Fit

Verifying model assumptions protects the validity of the results. Key assumptions include linear relationships, constant error variance (homoscedasticity), independent errors, and normally distributed errors. Violations can bias coefficient estimates or their standard errors and obscure true variable relationships.

Model fit evaluation assesses how well predictors explain outcome variation. Metrics like R-squared and adjusted R-squared quantify explanatory power, while residual plots identify potential issues. A high R-squared indicates strong explanatory capability but doesn't prove causation. Residual analysis detects assumption violations, guiding model refinement for improved reliability.
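The fit metrics mentioned above follow directly from the residuals. The sketch below computes R-squared and adjusted R-squared from observed and fitted values. The numbers are made up for illustration, and the function names are hypothetical.

```python
def r_squared(observed, fitted):
    """Share of outcome variance explained by the model: 1 - SS_res / SS_tot."""
    mean_y = sum(observed) / len(observed)
    ss_res = sum((y - f) ** 2 for y, f in zip(observed, fitted))
    ss_tot = sum((y - mean_y) ** 2 for y in observed)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n_obs, n_predictors):
    """Penalize R-squared for the number of predictors in the model."""
    return 1 - (1 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


# Illustrative observed values and fitted values from a one-predictor model.
observed = [1.0, 2.0, 3.0, 4.0, 5.0]
fitted = [1.1, 1.9, 3.2, 3.8, 5.0]

residuals = [y - f for y, f in zip(observed, fitted)]
r2 = r_squared(observed, fitted)
adj_r2 = adjusted_r_squared(r2, n_obs=len(observed), n_predictors=1)
print(f"R-squared = {r2:.4f}, adjusted R-squared = {adj_r2:.4f}")
```

Plotting the residuals against the fitted values is the usual next step: a funnel shape suggests non-constant variance, and a curve suggests a non-linear relationship.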

Beyond the Metrics: Considering User Experience and Feedback

Understanding User Experience in Multivariate Testing

While MVT excels at optimizing conversion metrics, user experience (UX) considerations often receive insufficient attention. Conversion rate improvements might coincide with negative UX impacts like increased bounce rates or navigation difficulties. Comprehensive evaluation should assess both statistical performance and user satisfaction across variations.

User behavior analysis tools (heatmaps, scroll maps) reveal interaction patterns with different versions, identifying confusing or frustrating elements. This qualitative dimension complements quantitative metrics for holistic evaluation.

Qualitative Feedback for Deeper Insights

Open-ended user surveys, interviews, and comment analysis provide context for numerical data. These methods uncover user perceptions and pain points that metrics alone might miss, such as unclear messaging or layout confusion.

The Importance of A/B Testing for User Experience

Combining A/B testing with MVT isolates specific UX impacts. Comparing original and modified versions of single elements (buttons, colors, layouts) provides focused UX insights. This approach clarifies how individual changes affect user interaction beyond multivariate results.

Iterative Improvements Based on User Feedback

Continuous testing cycles incorporating user feedback drive ongoing optimization. Regular UX assessment ensures testing evolves beyond initial success metrics to address the complete user journey. This process creates progressively refined variations that balance performance and usability.

Integrating Feedback into Future Testing Strategies

User experience findings should directly inform subsequent test planning. Insights guide hypothesis development, improvement prioritization, and user-centered design approaches. Sustained feedback integration creates optimization strategies that enhance both conversions and long-term user satisfaction.