Optimizing for Conversational AI Assistants

Personalization and Contextual Awareness

Personalization for Enhanced User Experience

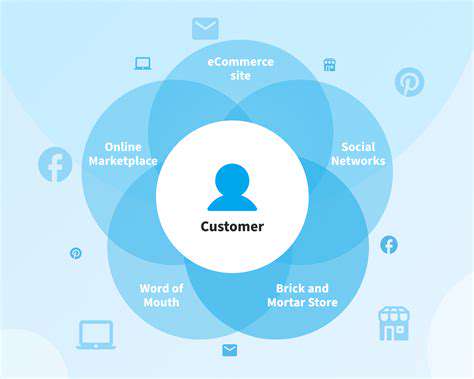

Creating a genuinely engaging conversational AI assistant hinges on personalization. By analyzing individual preferences, past interactions, and context, the assistant can deliver customized responses and suggestions. This tailored approach improves user satisfaction because the assistant can anticipate needs without requiring explicit instructions. For example, a user who often searches for local dining options can receive location-specific restaurant recommendations instead of generic ones. Such personalization builds trust and encourages repeated use.

Beyond recommendations, personalization also involves adapting the assistant's tone and communication style to match the user's preferences. This subtle adjustment creates more natural interactions, making the assistant feel less robotic and more like a supportive companion.
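
To make the restaurant example concrete, here is a minimal sketch of preference-based ranking. It assumes the assistant already has a list of nearby candidates and a log of cuisines the user picked before; the `Restaurant` class, the `rank_restaurants` function, and the sample data are hypothetical names invented for illustration, not part of any specific assistant platform.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Restaurant:
    name: str
    cuisine: str
    distance_km: float


def rank_restaurants(candidates, past_cuisines, max_distance_km=5.0):
    """Rank nearby restaurants by how often the user chose each cuisine before."""
    cuisine_counts = Counter(past_cuisines)
    # Drop anything outside the (assumed) distance limit.
    nearby = [r for r in candidates if r.distance_km <= max_distance_km]
    # Most-chosen cuisine first; ties are broken by the shorter distance.
    return sorted(nearby, key=lambda r: (-cuisine_counts[r.cuisine], r.distance_km))


# Hypothetical sample data.
candidates = [
    Restaurant("Trattoria Uno", "italian", 1.2),
    Restaurant("Sushi Go", "japanese", 0.8),
    Restaurant("Far Away Grill", "italian", 12.0),
    Restaurant("Taco Stop", "mexican", 0.5),
]
history = ["italian", "italian", "japanese"]

ranked = rank_restaurants(candidates, history)
print([r.name for r in ranked])
```

A real system would likely weigh many more signals (time of day, ratings, recency of each choice), but the shape is the same: filter by context, then order by learned preference.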

Contextual Awareness for Accurate Responses

Delivering precise and relevant responses depends heavily on contextual awareness. The assistant must grasp the conversation's history, the user's current context, and the nuances of their requests. For instance, when asked "What's the weather like tomorrow?", the assistant should automatically consider the user's location, inferred from prior interactions or real-time data. Without this awareness, it might provide irrelevant or overly general information.

Sustaining context across multiple interactions is vital for a seamless experience. Remembering past requests allows the assistant to predict future needs, streamlining tasks and boosting user satisfaction.
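
One simple way to carry context between turns is to keep a small store of "slots" (such as location) that later requests can fall back on. The sketch below illustrates the weather example under that assumption; `ConversationContext` and `answer_weather` are hypothetical names, and the function only builds a reply string rather than calling a real weather service.

```python
class ConversationContext:
    """Keeps slot values (such as location) across turns of one conversation."""

    def __init__(self):
        self.slots = {}

    def update(self, **new_slots):
        # Only overwrite slots that the current turn actually supplied.
        self.slots.update({k: v for k, v in new_slots.items() if v is not None})

    def resolve(self, slot, default=None):
        return self.slots.get(slot, default)


def answer_weather(context, day, location=None):
    """Build a reply, reusing the remembered location when none is given."""
    context.update(location=location)
    place = context.resolve("location")
    if place is None:
        # No context to fall back on, so ask instead of guessing.
        return "Which city do you mean?"
    return f"Looking up the weather for {place} ({day})."


ctx = ConversationContext()
first = answer_weather(ctx, "today", location="Lisbon")
second = answer_weather(ctx, "tomorrow")  # no location given; Lisbon is reused
print(first)
print(second)
```

When no location has ever been supplied, the sketch asks a clarifying question rather than returning a generic answer, which mirrors the point above about avoiding irrelevant responses.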

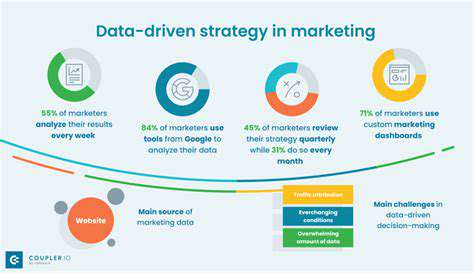

Leveraging Data for Improved Personalization

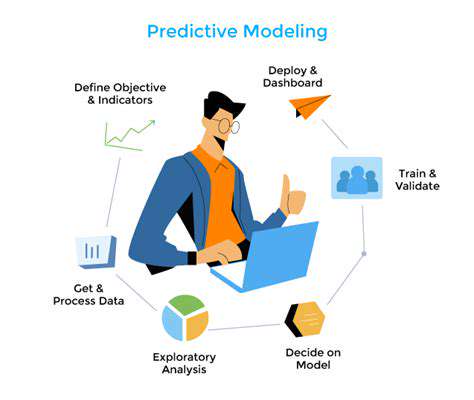

Effective personalization and contextual awareness rely on robust data analysis. This includes reviewing past interactions, user preferences, and real-time details like location. Training the assistant on diverse datasets helps it identify patterns and predict needs accurately. A data-driven approach ensures continuous refinement, allowing the assistant to evolve with user preferences.

However, safeguarding data privacy is non-negotiable. Strict compliance with regulations, transparent policies, and user consent mechanisms are essential to maintain trust and foster positive relationships.
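
As an illustration of a consent mechanism, the following sketch stores a preference only when the user has opted in and deletes stored data when consent is withdrawn. `PreferenceStore` and its methods are hypothetical; an actual deployment would need persistent storage, audit logging, and legal review, none of which is shown here.

```python
class PreferenceStore:
    """In-memory preference store that refuses to save data without consent."""

    def __init__(self):
        self._consent = {}
        self._data = {}

    def set_consent(self, user_id, granted):
        self._consent[user_id] = granted
        if not granted:
            # Withdrawing consent also deletes what was already stored.
            self._data.pop(user_id, None)

    def remember(self, user_id, key, value):
        """Store a preference; returns False if the user has not opted in."""
        if not self._consent.get(user_id, False):
            return False
        self._data.setdefault(user_id, {})[key] = value
        return True

    def recall(self, user_id, key):
        return self._data.get(user_id, {}).get(key)


store = PreferenceStore()
saved_without_consent = store.remember("u1", "home_city", "Lisbon")
store.set_consent("u1", True)
saved_with_consent = store.remember("u1", "home_city", "Lisbon")
print(saved_without_consent, saved_with_consent, store.recall("u1", "home_city"))
```

Defaulting to "no consent" when nothing is recorded is a deliberate choice in this sketch: personalization data is collected only after an explicit opt-in.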

Optimizing for Natural Language Understanding

Natural language understanding (NLU) forms the foundation of any effective conversational AI. The assistant must decode human language intricacies, including slang, idioms, and accents, to interpret requests correctly. Poor NLU can lead to misunderstandings, frustrating users and diminishing engagement.

Regularly updating NLU models with diverse training data helps the assistant keep pace with evolving language trends. This dynamic approach enables it to handle a broader range of inputs, creating more intuitive interactions.

Evaluating and Iterating on Performance Metrics

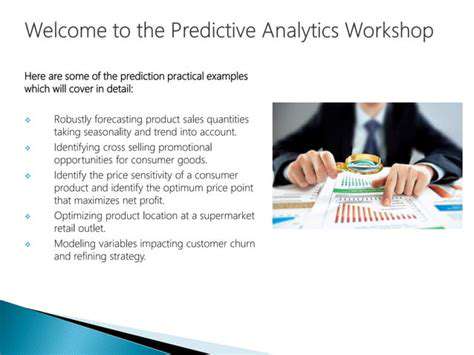

Understanding Performance Metrics

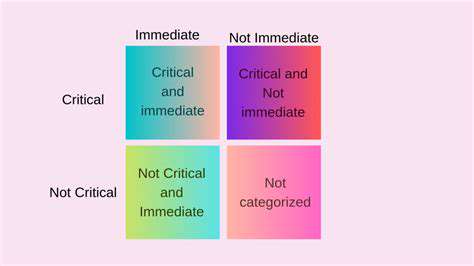

The first step in performance evaluation is identifying the right metrics. Key performance indicators (KPIs) must align with desired outcomes. For example, improving website conversions requires tracking bounce rates, session durations, and page views. Focused metric selection drives meaningful improvements.

Selecting relevant metrics is pivotal: arbitrary data tracking won't yield actionable insights. Each metric should map directly to a project goal so that progress can be measured accurately.
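
The metrics named above can be computed from raw session records. The sketch below assumes each session is a dictionary with `pages_viewed`, `duration_s`, and `converted` fields, and treats a "bounce" as a session with exactly one page view; both the field names and that definition are assumptions for illustration, since analytics tools define these terms in slightly different ways.

```python
def summarize(sessions):
    """Compute a few basic KPIs from a list of session records."""
    total = len(sessions)
    if total == 0:
        return {"bounce_rate": 0.0, "avg_duration_s": 0.0,
                "conversion_rate": 0.0, "page_views": 0}
    # Assumed definition: a bounce is a session with exactly one page view.
    bounces = sum(1 for s in sessions if s["pages_viewed"] == 1)
    conversions = sum(1 for s in sessions if s["converted"])
    return {
        "bounce_rate": bounces / total,
        "avg_duration_s": sum(s["duration_s"] for s in sessions) / total,
        "conversion_rate": conversions / total,
        "page_views": sum(s["pages_viewed"] for s in sessions),
    }


# Hypothetical sample data.
sessions = [
    {"pages_viewed": 1, "duration_s": 10, "converted": False},
    {"pages_viewed": 4, "duration_s": 180, "converted": True},
    {"pages_viewed": 2, "duration_s": 60, "converted": False},
    {"pages_viewed": 1, "duration_s": 5, "converted": False},
]

kpis = summarize(sessions)
print(kpis)
```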

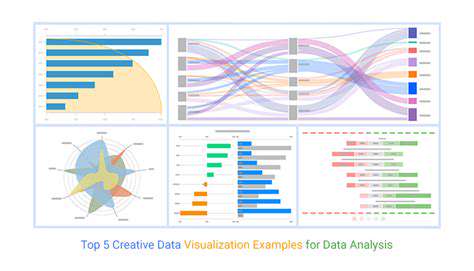

Analyzing Performance Data

After selecting metrics, structured data analysis is crucial. Identifying trends and patterns helps pinpoint improvement areas. Visual tools like charts simplify interpreting performance fluctuations, enabling data-driven decisions.

Correlating metrics reveals deeper insights. For instance, a conversion rate drop might link to reduced time on a specific page, guiding targeted enhancements to boost engagement.
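
A quick way to check whether two metrics move together is the Pearson correlation coefficient. The sketch below computes it by hand for two hypothetical weekly series (average time on a page and conversion rate); the numbers are invented for illustration. A value near 1 or -1 suggests a strong linear relationship, but correlation alone does not show that one metric causes the other.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical weekly data: both metrics decline over five weeks.
time_on_page_s = [52, 48, 41, 33, 25]
conversion_rate = [0.050, 0.047, 0.041, 0.030, 0.024]

r = pearson(time_on_page_s, conversion_rate)
print(f"r = {r:.3f}")
```

A strong correlation like this is a prompt for investigation (for example, reviewing recent changes to that page), not proof of the cause.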

Iterating and Implementing Improvements

Data analysis highlights areas for refinement, whether through strategy adjustments or new technologies. Successful iteration demands flexibility, testing hypotheses, and measuring outcomes. Implement changes incrementally, monitoring effects before full deployment.
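
Incremental rollout is often implemented by assigning each user to a stable bucket and enabling the change only for buckets below a chosen percentage. The sketch below shows one such approach using a hash of the user ID; `in_rollout`, the feature name, and the user IDs are hypothetical, and this is one possible scheme rather than a prescribed method.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Return True if this user falls inside the first `percent` of buckets."""
    # Hashing feature + user gives a stable bucket that differs per feature.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # bucket in the range 0..99
    return bucket < percent


users = [f"user-{i}" for i in range(1000)]
enabled_10 = [u for u in users if in_rollout(u, "new-greeting", 10)]
enabled_50 = [u for u in users if in_rollout(u, "new-greeting", 50)]
print(len(enabled_10), len(enabled_50))
```

Because a user's bucket never changes, everyone who saw the change at 10% still sees it at 50%, so metrics gathered at each stage remain comparable before full deployment.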

Documenting adjustments and their impacts ensures sustainable progress. Ongoing evaluation keeps improvements aligned with long-term goals, adapting to changing needs.
